Discuss a specific application in Robot Learning, that is, robot grabbing Robotic

Preface

One year ago, we discussed the development of Robot Learning>>Combing|The development of Robot Learning, then after nearly a year of development, Robot Learning has also made a lot of new progress, especially in Meta Learning. But today we will first specifically discuss a specific application in Robot Learning, which is Robotic Manipulation/Grasping. Why consider this issue specifically? Because this is one of the most urgent problems to be solved to reflect the intelligence of robots.

We can consider what kind of intelligence home robots need to have? It can be said that the most important thing is to have two capabilities, one is the mobile navigation capability, and the other is the grasping capability of the robotic arm. So the Fetch robot like the picture below actually satisfies the hardware required by household robots. Let’s not talk about the issue of mobile navigation today, but just talk about the frontiers of robotic grasping.

For robot crawling, there are actually many specific research problems and methods. For example, the current system for crawling No.1 is Berkeley’s Dex-Net 4.0. Friends who are interested can read this report: Exclusive: This is the most Dexterous robot ever created, but Dex-Net is not an end-to-end deep learning system. It just uses a neural network to estimate the location of the grab, and then grabs it by planning. It is essentially an open-loop control system. . Such a system is no problem for completely static and simple objects, but if the object is blocked or changed, then this system is more difficult to deal with. Therefore, given that the preference of this column is general artificial intelligence AGI, we only want to focus on one way to solve robot grabbing, that is:

End-to-End Vision-Based Robotic Manipulation End-to-End Vision-Based Robotic Manipulation

We hope that the entire robot grasping process is learned by the robot itself! This is very similar to human behavior!

So with this topic, let's take a look at who has researched in this area, basically it can be said that it is concentrated in two teams:

1) Google Brain Robotics team

2) Berkeley Sergey Levine team

In addition, Deepmind, OpenAI, Stanford's Li Fei-Fei team and CMU's Abhinav Gupta team have some eye-catching research, but they did not specifically focus on the issue of Robotic Manipulation. In fact, Google Brain team Sergey Levine is also among them, so the papers we see next are basically from Sergey Levine!

Paper List

[1] Sadeghi, Fereshteh, et al."Sim2real view invariant visual servoing by recurrent control."arXiv preprint arXiv:1712.07642(2017).

[2] Riedmiller, Martin, et al."Learning by Playing-Solving Sparse Reward Tasks from Scratch."arXiv preprint arXiv:1802.10567(2018).

[3] Quillen, Deirdre, et al. "Deep Reinforcement Learning for Vision-Based Robotic Grasping: A Simulated Comparative Evaluation of Off-Policy Methods."arXiv preprint arXiv:1802.10264(2018).

[4] Haarnoja, Tuomas, et al. "Composable Deep Reinforcement Learning for Robotic Manipulation." arXiv preprint arXiv:1803.06773(2018).

[5] Fang, Kuan, et al."Learning Task-Oriented Grasping for Tool Manipulation from Simulated Self-Supervision."arXiv preprint arXiv:1806.09266(2018).

[6] Kalashnikov, Dmitry, et al. "QT-Opt: Scalable Deep Reinforcement Learning for Vision-Based Robotic Manipulation."arXiv preprint arXiv:1806.10293(2018).

[7] Matas, Jan, Stephen James, and Andrew J. Davison. "Sim-to-Real Reinforcement Learning for Deformable Object Manipulation." arXiv preprint arXiv:1806.07851(2018).

[8] OpenAI "Learning Dexterous In-Hand Manipulation" (2018).

The above papers are roughly the most important papers directly related to robotic manipulation in the past year. Since I am mainly concerned about the results of the Sergey Levine team, I may neglect other work. If you have a good friend who has good related paper recommendations, welcome Leave a message under this article, thank you!

This article does not intend to analyze each paper in detail, but to make an overall analysis of the current research progress of robotic grasping as a whole.

The overall analysis of the research progress of robot grasping

At present, the core of the research on robot grasping or the entire robot learning lies in three aspects:

1) Algorithm level

2) Sim-to-real migration from simulation to real environment

3) Application level

The first is the algorithm level. We hope that the DRL algorithm can have higher efficiency, faster learning speed, and handle more difficult learning tasks in robot grasping. Therefore, in the above paper list, [3] evaluates a variety of DRL off-policy algorithms, and [6] uses one of them to do large-scale experiments in real scenarios. [4] uses soft q-learning to make Robots with stronger exploration capabilities [2] are studying the use of auxiliary rewards to deal with the problem of too sparse rewards in complex robot learning tasks.

Next is the migration problem from the simulation environment to the real environment. Due to the inconvenience and high cost of real robot experiments, it is almost inevitable to migrate from simulation to reality (Google's robot farm method is not something everyone can do), so many research focuses on simulation To the real migration, for example [1] study sim2real through multiple perspectives [7] study the operation of non-rigid objects in a simulation environment [3] propose a simulation benchmark for robot grasping, and the research on robot grasping can be Play a great role in promoting.

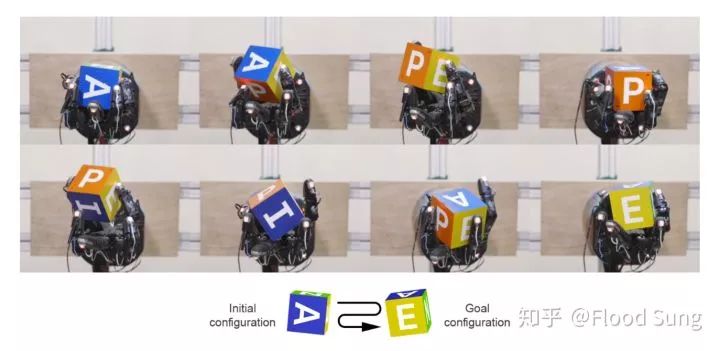

Finally, at the application level, everyone not only pays attention to the simple ordinary robot grasping problem, but also considers the more complicated manipulation problem. Therefore, with [8] OpenAI just released the results of the robot hand playing cubes, [5][7 ] Study more complex robot grasping problems.

So what are the important research advances in these three areas? Let's first analyze the latest result of OpenAI separately.

3 Learning Dexterous In-Hand Manipulation

The result of OpenAI just came out in the past two days, using DRL to realize the dexterous operation of the manipulator block. Although this work is not for grasping, the methodology is exactly the same. This article has a strong reference value for the robot grasping problem.

1) The results of this article: The DRL algorithm PPO is used to train the manipulator to play cubes in a simulation environment, and the trained model is directly transferred to the real scene to achieve success, and the entire training does not include a human demo. It is completely self-study based on the reward. Completed, and the final effect is very close to human behavior, much like human operation.

2) Reasons for such success: (1) The highly simulated system is very close to the real environment, and the reality gap is relatively small. (2) The simulation environment randomization technique is used to greatly expand the scope of the simulation environment, so that the neural network can learn Can adapt to various scenarios, of course, including real scenes. This kind of randomization includes, for example, different friction, different presentations, different camera angles, etc. (3) Large-scale distributed parallel training, using hundreds of machines with 6144 CPUs to run a distributed simulation environment to collect data, and then Using an 8GPU V100 to train the model, as the title says, is equivalent to training for 100 years.

3) Enlightenment: (1) DRL can learn through learning effects that traditional non-learning algorithms simply cannot do. End-to-end neural network must be the future of robots! (2) The simulation environment is super important. With the development of technology, simulation can definitely get better and better. This means that future robots will inevitably be trained in simulation, with low cost and high speed. Not for. (3) Large-scale calculations can directly make up for the problem of DRL sample inefficiency. Anyway, as long as there is enough equipment, it can be done if the data is piled up for a day to train him for one year.

further analysis

In the last section, we briefly analyzed the cool work of OpenAI, but the conclusion we can get is: this is more an engineering victory than an algorithm victory. The algorithm is still PPO, and there is no substantial change.

Similarly, Google’s previous important progress in robotic crawling [6] QT-Opt has greatly improved the effect of end-to-end robotic crawling, but when we analyze the algorithm in it, we will find that the algorithm is only Q-Learning. A variant of, compared to DDPG, it does not use Actor Network, but obtains actors through evolutionary algorithm CEM, which can make training more stable and facilitate large-scale distributed training.

For [6] and [8], in fact, we have discovered the importance of large-scale distributed learning for performance improvement. Of course, this is also in full compliance with the situation of deep learning. As long as there is data and high-performance computing, performance can be piled up. Since deep reinforcement learning has a much larger sample inefficiency problem than supervised learning, in fact, deep reinforcement learning must be able to work, and the amount of data required will be much larger than supervised learning such as imagenet. For this reason, we see that AlphaGo, OpenAI's robotic hands, and OpenAI's Dota all use a huge amount of computing resources. A small robot hand uses 6144 CPUs and 8 V100 GPUs for training, which is also very unprecedented.

What's next?

Two points are very predictable:

1) More and better simulation environments will inevitably come out in the future. This year's research can be said to be a clear affirmation of the feasibility of directly migrating simulation to the real environment, so there is no reason to go further to develop a more realistic simulation environment.

2) Faster and stronger distributed learning system. Needless to say, this is the fuel that drives the progress of robot learning.

With the above two points, even if you keep the existing algorithm unchanged, I believe that you can train stronger or more complex robot learning results than the current one. The end-to-end vision-based robot grasping directly pushes to more than 99% The accuracy rate is completely possible, which tends to be commercial.

So how should the algorithm level develop?

We need the traction of more complex tasks. For example, we need the collaborative crawling of dual robotic arms, which involves multi-agent robot learning. For example, we need robots to be able to complete a longer series of tasks, which requires the research of Hierarchical Reinforcement Learning. For example, we need robots to be able to complete multiple tasks at the same time, which requires multi task learning. For example, we hope that the generalization of the robot can be stronger and be able to handle objects that have not been seen. Then this requires the research of Meta Learning, so this piece is very hot now. For example, we need robots to learn new tasks quickly, which requires Continual Learning, Meta Learning, and if it is required to achieve through imitation learning, then imitation learning is required.

Here I recommend that everyone can read the questions sorted out by the first CoRL. From here, we will feel that Robot Learning is really just beginning, and the research questions are too simple.

https://docs.google.com/document/d/1biE0Jmh_5nq-6Giyf2sWZAAQz23uyxhTob2Uz4BjR_w/edit

It is only in 2018 that there is a benchmark crawled by robots. I believe that more benchmarks will appear in the future to promote the development of this field.

Final summary

This article does not analyze the specific idea of ​​each paper in detail, but rather analyzes the overall research progress of robot grasping and robot learning in a broad manner. In general, for the specific problem of robot grasping, it will be quickly seen from the industrial application point of view. It must be promoted in the research and development of simulation systems and in large-scale distributed learning. The core is indeed in engineering implementation. For academic research, the most important thing is to define a new task and a new benchmark, so as to promote the development of the algorithm level and the application level on the basis of the new task.

P3.91-7.82 Transparent LED Display

Features:

*Ventilated light

*Free air conditioning heat saving energy

*Environmental protection- it uses only a third of the power of a conventional Led screen

* Convenient installation High compatibility

* Nova MSD 300 sending card and Nova A5S receiving card

* High debugging brightness and no damage to gray scale, achieving the debugging technology for nice image.

* Passed the TÃœV,FCC,ROHS,CE cetification.

Our company have 13 years experience of led display and Stage Lights , our company mainly produce Indoor Rental LED Display, Outdoor Rental LED Display, Transparent LED Display,Indoor Fixed Indoor LED Display, Outdoor Fixed LED Display, Poster LED Display , Dance LED Display ... In additional, we also produce stage lights, such as beam lights Series, moving head lights Series, LED Par Light Series and son on...

Application:

* Business Organizations:Supermarket, large-scale shopping malls, star-rated hotels, travel agencies

* Financial Organizations:

Banks, insurance companies, post offices, hospital, schools

* Public Places:

Subway, airports, stations, parks, exhibition halls, stadiums, museums, commercial buildings, meeting rooms

* Entertainments:

Movie theaters, clubs, stages.

Transparent LED Display,Transparent Led Display,Transparent Poster Led Display,Led Screen Panel

Guangzhou Chengwen Photoelectric Technology co.,ltd , https://www.cwstagelight.com